Traditional data backup (from storage snapshots to backup software) may suffice for periodic, scheduled backups, or cold site (active-passive) disaster recovery. It’s great for backing up and restoring entire volumes of data, individual file systems, and (depending on your solution) individual files, emails, or records in a database, for example.

But if you need comprehensive file protection, fast recovery, and recovery points measured in seconds, traditional backup solutions aren’t going to cut it.

And in cases where you require an additional level of automated file protection — i.e., protecting all files across all locations in real-time — you may need a file replication or synchronization solution.

For example, most companies need a disaster recovery and high availability plan that provides both comprehensive data protection (i.e., meeting the recovery points your company demands) and fast recovery. Conventional approaches to both traditional backup and file replication introduce latency and bottlenecks and may expose points of failure (in some cases, numerous ones), especially when protecting or replicating large files or many millions of files.

As a complementary solution to traditional backup and restore products, Resilio offers real-time, low latency file protection for resilience and fast recovery.

In this article, we’ll go deep on Resilio and the concept of real-time backup. You can also think about Resilio in the context of real-time replication — i.e., a way to protect files automatically across any number of locations. We’ll address how Resilio:

- Rapidly syncs files across all locations in real-time

- Enables you to deliver faster recovery time objectives (RTOs) and lower recovery point objectives (RPOs)

- Scales organically to provide enhanced performance as your file data — and thus, your sync environment — grows.

Our hope is that this article will give readers a better sense of what to look for in a real-time server backup service, whether or not you decide to go with Resilio.

If you believe that Resilio could be a fit for your organization, schedule a demo here.

P2P Real-Time Server Backup

Unlike other file replication and backup solutions, Resilio uses a peer-to-peer server synchronization architecture that can be deployed in a variety of configurations and on any type of connectivity, operating system (Mac, Windows, Android, Linux, etc.), storage, cloud, and data center location.

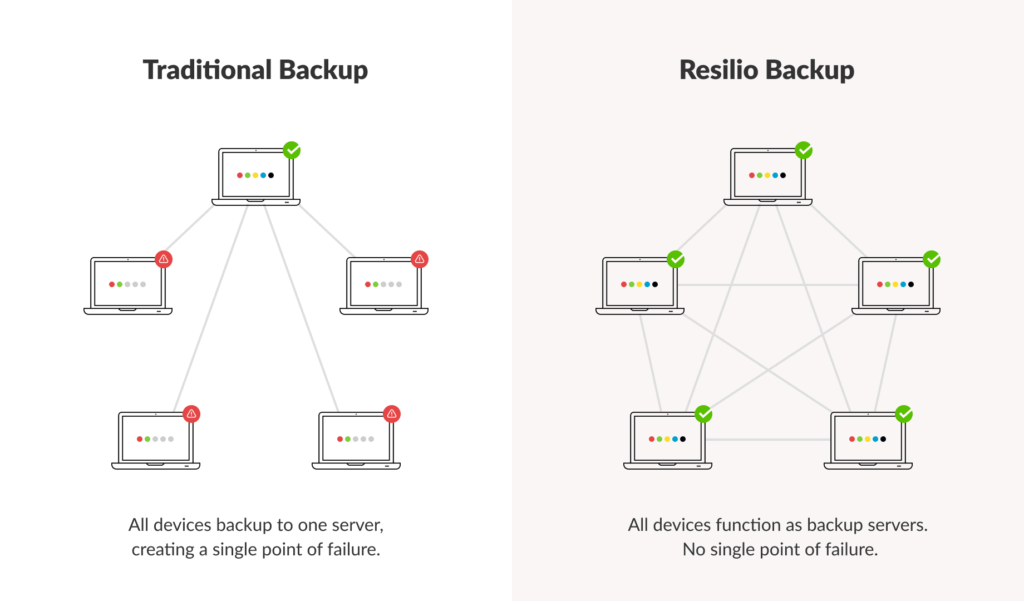

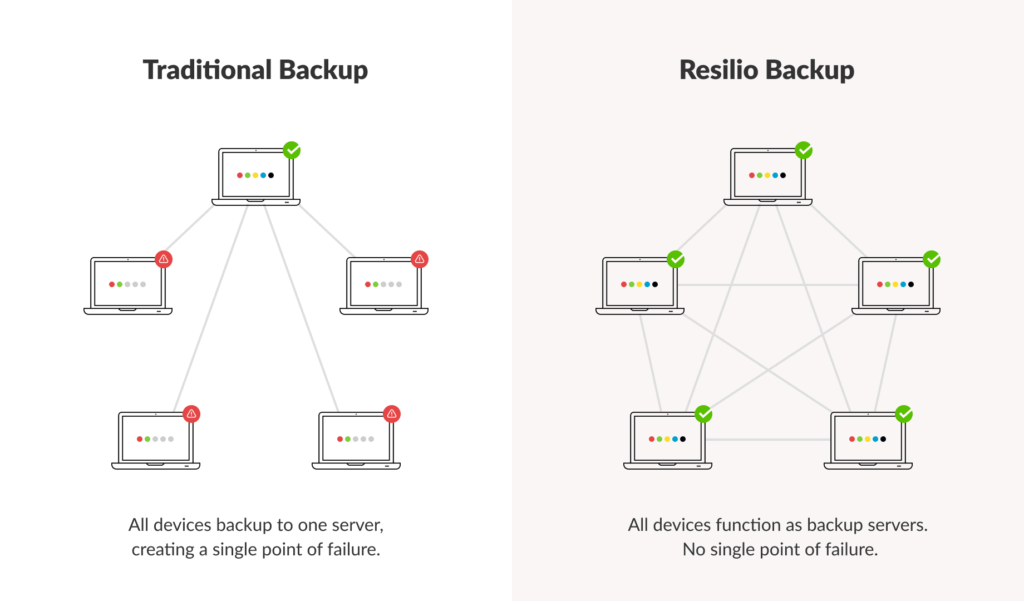

In traditional backup environments, data is copied and stored on one or more backup servers (offsite, on-premises, on external drives, or in cloud storage), an approach also known as the dedicated server model. In some cases, entire volumes of data must be backed up and restored just to access and restore an individual file. This is a costly and complex process, especially in large production environments with many devices (mobile, desktop, server, etc.), where each device must be backed up individually. This backup process may also be part of a comprehensive methodology that has been in use for many years.

But when it comes to synchronizing large files, or many millions of files, across many devices, it is extremely challenging to adequately protect all of them.

And syncing data from all devices onto your servers places a high load on the server network, further reducing sync speed. To solve this issue, organizations have to allocate or overprovision IT infrastructure resources (servers, storage, and network capacity).

With Resilio’s P2P architecture, file data is protected across all devices (or all of the devices included in your replication job to back up data). This means you’ll benefit from:

- A faster backup process: With every device replicating files simultaneously, backup occurs 3-10x faster than any dedicated server solution.

- Better network usage: Since backup occurs across every device rather than just to a dedicated server, each device shares the load on the network and no single central server's network links and disks are overloaded, which leads to faster synchronization.

Resilio also increases backup speed using:

File chunking: Files are split into several chunks that are replicated in parallel across multiple devices. As soon as a device receives a file chunk (or hash), it can begin sharing it with other devices. This replication architecture is highly resilient, and provides continuous data protection.
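To make the chunking idea concrete, here is a minimal sketch of splitting data into chunks and hashing each one so that peers can identify and exchange chunks independently. The 4 MiB chunk size, the use of SHA-256, and the function names are assumptions for illustration; the article does not describe Resilio's actual chunk size or hash function.

```python
import hashlib
from typing import Iterator, List

CHUNK_SIZE = 4 * 1024 * 1024  # assumed chunk size (4 MiB), illustrative only


def split_into_chunks(data: bytes, chunk_size: int = CHUNK_SIZE) -> Iterator[bytes]:
    """Yield consecutive fixed-size chunks of data (the last one may be shorter)."""
    for offset in range(0, len(data), chunk_size):
        yield data[offset:offset + chunk_size]


def chunk_hashes(data: bytes, chunk_size: int = CHUNK_SIZE) -> List[str]:
    """Return the SHA-256 hex digest of each chunk, in order."""
    return [hashlib.sha256(chunk).hexdigest()
            for chunk in split_into_chunks(data, chunk_size)]
```

Because each chunk has its own hash, a device that holds only some chunks of a file can still serve those chunks to other devices while the remainder is in flight.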

Delta deduplication: Resilio detects changes to files and replicates only the changed file chunks in order to perform highly efficient replication and help save storage space (Note: this is quite different from differential backup).
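As a rough illustration of replicating only what changed, the sketch below compares per-chunk hashes of an old and a new version of a file and returns the indexes of the chunks that would need to be sent. It assumes fixed-offset chunks and SHA-256, and the function names are hypothetical; it is not Resilio's actual algorithm. A simple scheme like this does not handle bytes inserted in the middle of a file, which shifts every later chunk.

```python
import hashlib
from typing import List


def chunk_hashes(data: bytes, chunk_size: int) -> List[str]:
    """SHA-256 hex digest of each fixed-size chunk, in order."""
    return [hashlib.sha256(data[i:i + chunk_size]).hexdigest()
            for i in range(0, len(data), chunk_size)]


def changed_chunks(old: bytes, new: bytes, chunk_size: int) -> List[int]:
    """Indexes of chunks in `new` that differ from, or do not exist in, `old`."""
    old_hashes = chunk_hashes(old, chunk_size)
    new_hashes = chunk_hashes(new, chunk_size)
    return [i for i, digest in enumerate(new_hashes)
            if i >= len(old_hashes) or digest != old_hashes[i]]
```

For instance, with a chunk size of 4 bytes, changing the second chunk of a 12-byte file and appending 2 bytes marks only chunks 1 and 3 for transfer; chunks 0 and 2 are left alone.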

WAN Optimization: Transferring data over WANs (wide area networks) with high round-trip times (RTTs) requires a transfer protocol that can maximize speed and minimize packet loss. Resilio’s proprietary transfer protocol, Zero Gravity Transport™, overcomes these challenges and enables you to back up files and sync servers over long distances by:

- Sending packets periodically with a fixed packet delay in order to create a uniform packet distribution over time.

- Using interval acknowledgement for a group of packets (rather than each individual packet) that provides additional info about lost packets.

- Using a congestion control algorithm that calculates the ideal send rate by periodically probing the RTT.

- Retransmitting lost packets once per RTT to reduce unnecessary retransmissions.
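Two of the ideas in the list above, fixed-delay pacing and acknowledging a group of packets at once, can be sketched in a few lines. Zero Gravity Transport is proprietary, so this is not its implementation; the function names, packet size, and send rate are assumptions chosen only to show the arithmetic.

```python
from typing import Iterable, List, Set


def packet_delay_seconds(packet_bytes: int, send_rate_bps: float) -> float:
    """Fixed gap between packets that spreads traffic evenly at send_rate_bps."""
    return packet_bytes * 8 / send_rate_bps


def send_times(packet_count: int, delay: float) -> List[float]:
    """Send-time offset of each packet when packets are uniformly spaced."""
    return [i * delay for i in range(packet_count)]


def lost_in_interval(first_seq: int, last_seq: int,
                     received: Iterable[int]) -> List[int]:
    """Build one acknowledgement for a group of packets: the sequence
    numbers in [first_seq, last_seq] that never arrived."""
    got: Set[int] = set(received)
    return [seq for seq in range(first_seq, last_seq + 1) if seq not in got]
```

In this sketch the sender would space packets `packet_delay_seconds(...)` apart, and on receiving the result of `lost_in_interval(...)` would retransmit only the listed sequence numbers rather than reacting to every packet individually.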

Faster Recovery & High Availability

In a traditional backup environment with a client-server architecture, entire volumes of data are backed up and restored. This is costly, complex, and time consuming. And, in addition to producing all of the same speed challenges mentioned above (i.e., higher demand on the network, slower transfers, higher latency, etc.), it also introduces a single point of failure. Your backup server (or cluster of backup servers) must be online and available at all times in order for other devices to access the data they need.

Any problem with the hardware or network on your backup server can render your data unavailable to other devices. Server availability can be impacted by software problems, OS errors, hardware failures, routing errors, and network disruptions.

This adds more complexity as organizations are forced to invest in backup servers and networks and also ensure they properly plan software and hardware updates in advance to prevent service interruptions.

In Resilio’s P2P architecture, all devices (running Resilio agents) work in parallel to protect and replicate files. If a failure occurs on one device, other Resilio agents are still able to access the data. And each device can find the best peer (i.e., the one closest to it) and request service from it. This eliminates single points of failure as well as the need to develop a complex system for maintaining availability.
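One simple way to picture "finding the closest device" is to pick the reachable peer with the lowest measured round-trip time. The sketch below assumes that interpretation of "closest"; the function name and the input format (peer name mapped to RTT in milliseconds, or `None` if unreachable) are hypothetical and do not reflect how Resilio agents actually select peers.

```python
from typing import Dict, Optional


def pick_closest_peer(rtt_ms: Dict[str, Optional[float]]) -> Optional[str]:
    """Return the reachable peer with the lowest round-trip time.

    A value of None marks a peer that did not respond. Returns None
    when no peer is reachable.
    """
    reachable = {peer: rtt for peer, rtt in rtt_ms.items() if rtt is not None}
    if not reachable:
        return None
    return min(reachable, key=lambda peer: reachable[peer])
```

If the lowest-latency peer goes offline, it simply drops out of the reachable set and the next-best peer is chosen, which is the failover behavior described above.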

Resilio is the best solution for Active-Active HA because everything is synced across multiple locations to make the data available to your entire organization. And as your system grows (i.e., more devices are added), it becomes even more resilient.

Organic Scalability

As your production environment grows (i.e., more devices, more files, larger transfers), it places greater demand on your backup system — which is a real problem for dedicated server backup.

Every server must be calibrated for the number of devices it supports. As the number of devices increases, you’ll need to increase the server’s CPU, memory, disk performance, etc. You may eventually be forced to add an additional backup server and design a system that balances the load between them. All of this requires large capital expense and heavy investment of manpower.

With Resilio’s P2P model, the more devices you add to your network, the more your infrastructure scales organically. More devices are replicating files and more devices are sharing the load in terms of network, CPU, and storage.

With P2P, more demand means more supply without the need for capital expenditure.
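The "more demand means more supply" claim can be illustrated with the standard idealized lower bounds on distribution time for client-server versus peer-to-peer delivery. This is a textbook-style model under simplifying assumptions (every device needs the full file, upload capacity is the only shared bottleneck), not a measurement of Resilio; the function and parameter names are illustrative.

```python
from typing import List


def client_server_time(file_bits: float, server_up_bps: float,
                       peer_down_bps: List[float]) -> float:
    """Lower bound when one server must upload a full copy to every device."""
    n = len(peer_down_bps)
    return max(n * file_bits / server_up_bps,
               file_bits / min(peer_down_bps))


def p2p_time(file_bits: float, server_up_bps: float,
             peer_up_bps: List[float], peer_down_bps: List[float]) -> float:
    """Lower bound when every device also re-uploads what it has received."""
    n = len(peer_down_bps)
    total_up = server_up_bps + sum(peer_up_bps)
    return max(file_bits / server_up_bps,
               file_bits / min(peer_down_bps),
               n * file_bits / total_up)
```

In the client-server bound, the first term grows linearly with the number of devices. In the P2P bound, each added device also adds its own upload capacity to `total_up`, so the bound grows far more slowly as the environment scales.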

Centrally Managed & Secure

If you’re using a tool such as DFSR for server backup, then you have little insight into and control over how synchronization occurs in your environment.

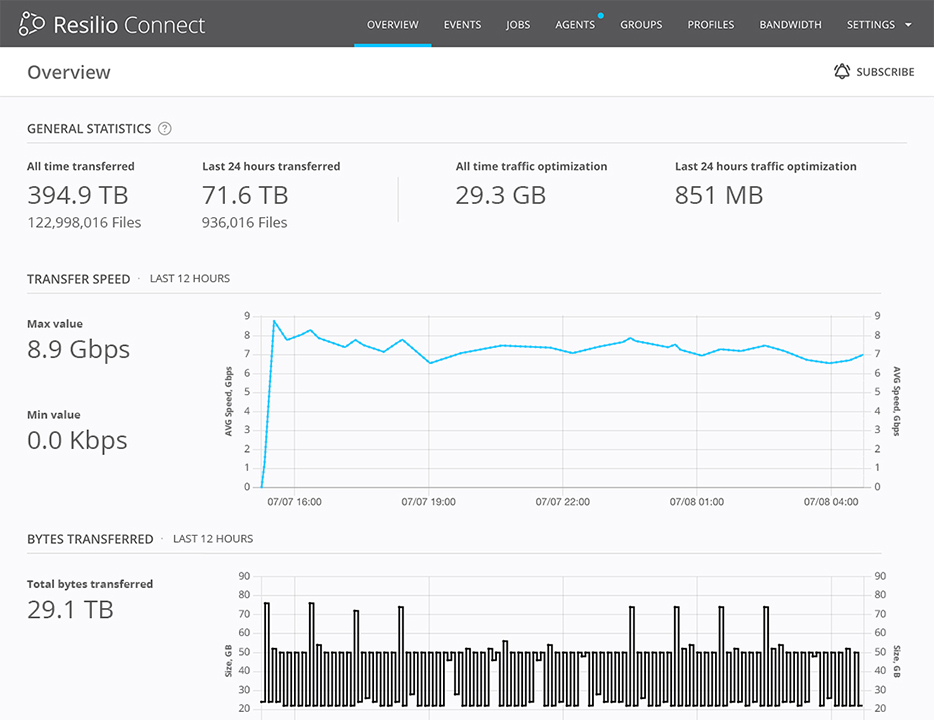

Resilio’s user-friendly dashboard enables you to monitor and manage data replication by providing detailed logs, real-time notifications, and the ability to:

- Set bandwidth usage limits for different times of the day or days of the week.

- Create bandwidth schedule profiles for different jobs and agent groups.

- Optimize performance by altering things such as buffer size, packet size, and more.

- Adjust file priorities, data hashing, and more to meet your needs.

- Script any Resilio functionality, manage agents, control jobs, provide real-time reports on data transfers and more with Resilio’s REST API.
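To show what a time-of-day and day-of-week bandwidth schedule amounts to, here is a small sketch that looks up the limit in effect at a given moment. The `BandwidthRule` structure and `limit_for` function are hypothetical and are not Resilio's configuration format or REST API; they only model the scheduling logic described in the list above.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional, Set


@dataclass
class BandwidthRule:
    days: Set[int]               # weekday numbers, Monday = 0
    start_hour: int              # inclusive
    end_hour: int                # exclusive
    limit_mbps: Optional[float]  # None means unlimited


def limit_for(when: datetime, rules: List[BandwidthRule],
              default: Optional[float] = None) -> Optional[float]:
    """Return the limit of the first rule matching `when`, else `default`."""
    for rule in rules:
        if when.weekday() in rule.days and rule.start_hour <= when.hour < rule.end_hour:
            return rule.limit_mbps
    return default
```

A profile such as `[BandwidthRule({0, 1, 2, 3, 4}, 9, 18, 50.0)]` would cap transfers at 50 Mbps during weekday business hours and leave them unlimited at night and on weekends.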

And to keep your data secure in the face of malicious attacks (such as ransomware), Resilio provides state-of-the-art data security that has been validated by third-party cybersecurity experts.

Resilio uses mutual authentication to ensure data is only delivered to secure, designated endpoints, and no VPNs are needed (although they are supported). All files are encrypted in transit using AES-256 to prevent interception. Cryptographic data integrity validation guards against data loss or corruption. And Resilio uses one-time session keys to protect sensitive data.
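As a generic illustration of integrity validation with a one-time session key, the sketch below generates a fresh random key and uses an HMAC to detect any modification of a chunk in transit. It uses only the Python standard library and does not perform AES encryption; the function names and the choice of HMAC-SHA-256 are assumptions, not a description of Resilio's actual cryptographic design.

```python
import hashlib
import hmac
import secrets


def new_session_key() -> bytes:
    """Generate a fresh random 256-bit key intended for a single session."""
    return secrets.token_bytes(32)


def make_tag(chunk: bytes, key: bytes) -> bytes:
    """Compute an HMAC-SHA-256 tag over the chunk."""
    return hmac.new(key, chunk, hashlib.sha256).digest()


def verify_chunk(chunk: bytes, received_tag: bytes, key: bytes) -> bool:
    """Return True only if the chunk matches the tag (constant-time compare)."""
    return hmac.compare_digest(make_tag(chunk, key), received_tag)
```

A receiver that gets `False` from `verify_chunk` would discard the chunk and request it again, so corrupted or tampered data never reaches disk.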

Back Up Your Data with Resilio

Resilio’s P2P-based server synchronization is the fastest, most reliable real-time backup and recovery solution available. It can be deployed with your existing local or cloud backup storage service and will work on day one with no operational disruption, so you can get going fast.

To learn more about how Resilio can help you protect your files, schedule a demo here.